Are you looking for how to build ETL using Python? Let us explore options for tools, methods, libraries, and alternatives here.

The whole process of creating data pipelines, data ingestion, data wrangling, and eventually the end of ETL into more meaningful storage aims to gather and harmonize the entire enterprise data to be utilized further to draw insights and make critical business decisions.

Famously Data Analysts and Data Engineers use their strong suits such as python programming to customize the standard ETL solutions available and go beyond and build their own data pipelines using programming in Python for ETL.

What is Python

Python is a very general-purpose programming language. It was created so that it is easy for everyone to read and write. It is known to be high-level and interpreted language. Developers love it for its easy syntax and dynamic semantics. With the rise of AI and Machine learning, Python became popular as it is easy to learn, read and understand and use.

It is an open-source language. Modifications by a developer are easy.

Python supports portability so that the code can be easily shared across developers.

Python is compatible with languages as well – this is unique since it can accommodate existing code easily. It has huge library support. Python supports Oops and is safe and secure to be used. No wonder Python is the developer’s choice for building data pipelines for ETL.

Java, Ruby etc. languages are also used for a similar process.

For using Python ETL

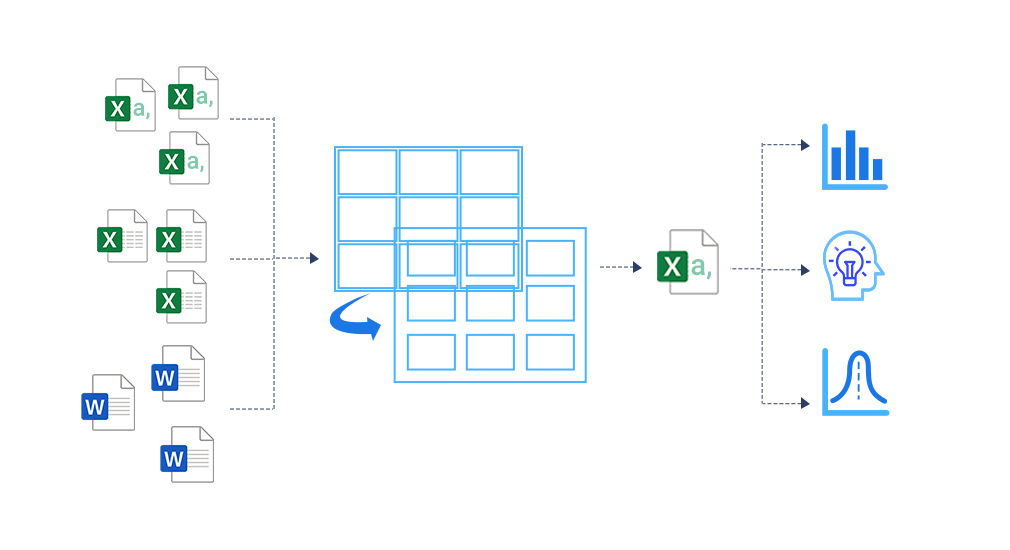

- Identify the use — Multiple ERP systems.

- Transform the data — Pandas library

- Generate a harmonized file –a summarized uniform CSV file.

- Apply analytics and data science algorithms to extract insights.

With the emerging technologies and developers continuously breaking and making copies of Python, there are way too many ETL tools available for ETL using Python.

Let’s take a look at a few of them.

ETL offering

Bonobo

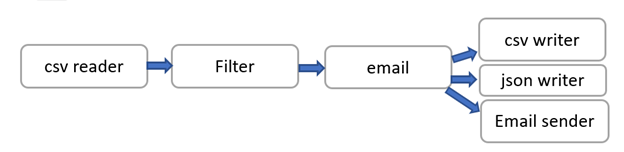

Bonobo is a popular simple ETL framework. It provides tools for building data pipelines for ETL that can process multiple data sources in different formats including CSV, JSON, XML, XLS, SQL, etc. in parallel. It has plugins to display the status of ETL jobs. It is suitable for simple, lightweight ETL jobs done.

Bonobo is simply Python; it can enable job monitoring; it can help enhance graphs using JSON writer and email sender to enable notification broadcasting.

This is still a work in progress.

Mara

Mara framework appears to be in between the writing scripts and apache airflow framework.

Mara is simple and easy if you have:

- Using PostgreSQL as the data processing engine

- Using declarative Python code to define data integration pipelines

- Using the command line as the main tool for interacting with databases

- Using UI from Mara.

This is still a work in progress.

Pygrametl

It is a python programmed framework that offers most of the commonly used functionalities to develop ETL processes. This is a continuously evolving framework. Includes interfacing between different data sources, running parallel data processing, or creating snowflake schemas.

Easy to integrate with other python scripts.

This library has been there for a long time, but it has not been used a lot.

Workflow management

Apache Airflow

It is an open-source automation tool built using Python.

A typical Airflow setup: Metadata DB> Scheduler > Executor > Workers

It uses all Python features to create workflows, including date-time formats for scheduling tasks and loops to dynamically generate tasks. Mostly used for building machine learning models, transferring data or managing data infrastructure.

Luigi

It is an automation tool. It is used to build batch processing complex data pipelines. It offers a relatively better visualization tool. Debugging is easy because there’s a feature that helps failure recovery.

Data processing and Transfer

P-ETL

Python ETL offers harmonization of data from various sources (CSV, XLS, HTML, TXT, JSON, etc.) into one single table or any other output format required.

Pandas Library

This is one of the most popular libraries in Python mostly used in data science. It is a fast, flexible and easy tool for data analysis and data manipulation. It does most of the processing in memory, and hence it is a bit slow. It offers better data alignment to fill up for missing data hence a very good fit for building ETL.

There are many such tools listed below:

- Spark

- Pyspark

- Odo

- ETLAlchemy

- Riko

- Locopy

- Hybrid Option – using one or more tools together to make the most of them is another good idea.

Let us know your thoughts about building ETL using Python in the comments below.

Make ETL simple with Daton

Daton is a robust tool for replicating data to a data warehouse. Daton is a simple data pipeline that can populate popular data warehouses like Snowflake, Google BigQuery, and Amazon Redshift and acts as a bridge to data mining, data analytics, and business intelligence. Daton allows anyone to set up a data pipeline with a few clicks instead of thousands of lines of Python code. Try Daton Now