The milestone of setting up a data warehouse for an organization itself is a significant achievement! And of course, the next phase comes with its challenges and is called C Warehouse Maintenance. Traditionally when we used to think about a data warehouse, we used to focus on essential aspects of it such as database and server, integration, and reporting analysis services on top of it.

We all know that as time passes the size of data volume an organization store grows exponentially. In every direction like the number of users and concurrent users, highly complex analytics for business decisions and the data warehouse has to support both faster load time and quicker response time at the same time. That’s a problem that almost everyone is trying to solve.

While building the data warehouse itself, it should not be a single and monolithic setup. Similarly setting up many independent data marts also is not a very great idea since each solution developed will end up acting in the data silo and no use for any further repurposing of the same data.

Scalability and flexibility are not that easy to achieve. Hence, there are some general rules followed such as choosing available latest technologies and methodologies to manage the expected growth and flexibility, managing ever-growing large volumes of data, ensuring optimized and accepted performance to meet business needs, and on top of that flexibility to be able to deploy new data marts as well as keeping in sync with the existing data warehouse model.

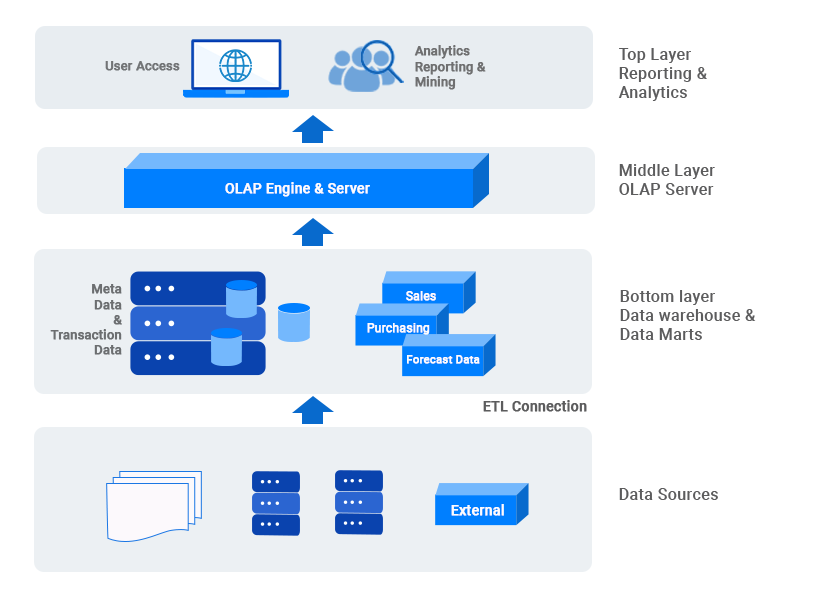

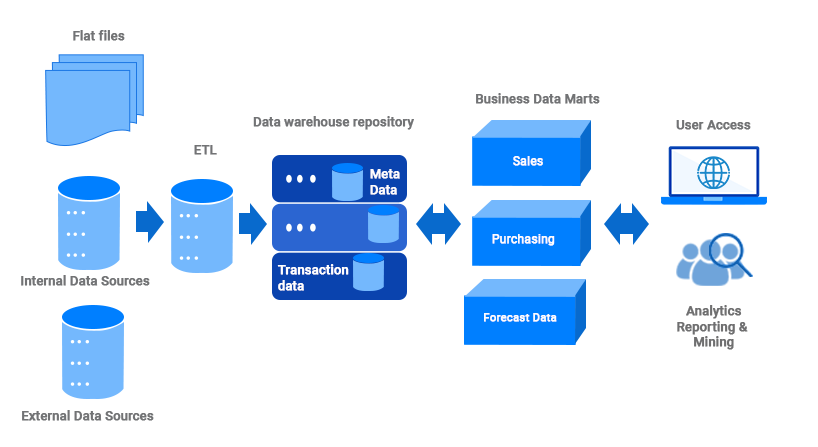

3 Layers of Data Warehouses

Bottom Layer

- Contains the database and server and ways to integrate data.

- It can also be a centralized data warehouse or multidimensional model for direct access and querying.

Middle Layer

- Generally OLAP engine that serves as a baseline for Top Layer.

Top Layer

- Contains tools for reporting and analytics.

Best Practices on Building a Data Warehouse

- Beginning with the end goal and scope of business requirements always helps

- Gathering of all relevant information

- Identifying the problem statement i.e. issue to be targeted.

- Designing a scalable and flexible data model on paper.

- Mapping required data sources from various locations and defining logic for required metrics and their specifications.

- Preparing a detailed plan for the execution of implementation.

- Project Execution based on agreed methodology.

Building a Scalable Database for a Data Warehouse

A scalable database is one that can meet the increasing demands of storing and processing massive volumes of data in a data warehouse setting.

Here are Several Best Practices for Creating a Scalable Data Warehouse Database:

Data Partitioning: It’s the process of dividing data into logical partitions depending on a criterion such as time or data range. This enables data to be distributed across numerous storage devices or servers, allowing for parallel processing and increased query performance.

Distributed Processing: Use a distributed architecture that allows the processing workload to be distributed among numerous nodes or servers. This ensures that the database can manage high query volumes while also performing complicated processes in parallel, hence improving scalability.

Indexing and Data Compression: Use efficient data compression techniques to reduce storage requirements and increase query performance. Use proper indexing schemes to optimise query performance and reduce the requirement for complete table scans. This could include leveraging modular storage systems, distributed file systems, or cloud-based infrastructure that allows for on-demand resource addition and removal.

Query Optimisation: Analyse and optimise queries conducted on the data warehouse on a regular basis to guarantee optimum resource use. This entails locating and removing bottlenecks or inefficiencies in query execution plans.

Implement Data Replication and Backup: This procedure is required to ensure data availability and fault tolerance. Keeping redundant copies of data on several servers or locations protects against data loss and increases system reliability.

Monitoring and Tuning: Constantly monitor the database’s performance and measure critical performance parameters such as query response times, resource utilisation, and system throughput. Use this data to discover performance issues and apply tuning approaches to improve the scalability of the database.

Best Practices for Maintaining a Data Warehouse

Addition of New Metrics to be Derived

This need is essential, and it comes with continuously evolving business processes. Over time processes, people, customers, and market trends change, and the need for tracking new metrics arise.

The addition of new metrics has a few simple steps, Adding the definition in the backend schema and updating all relevant tables with new columns. Along with the adjustment of tables and views, updating the data can be a problem when backfilling needs to be done.

At times the history data is updated by backdating information and reloaded if possible wherever possible or left for future updates only, it depends on specific business requirements. Here if we have detailed documentation on technical specs along with logic and definition and naming conventions helps a lot.

Updating or Removing Some Old KPIs

Similar to adding new metrics, updating or removing old ones which are not relevant anymore is also of crucial importance to keep pertinent data and performance up to the mark. There are two ways of handling the same.

The outdated metrics can be deactivated by either renaming existing ones ensuring the naming convention is followed and pausing the data update in integration or dropping the data if feasible and setting them up as not set with the correct naming convention. Again the technical specs come in handy here as well.

Performance Tuning

Performance Tuning is as essential to ensure performance is optimal. Reviewing the DB size, and configuration settings frequently helps maintain the data warehouse.

Refreshing indexes once in a while keeps the database in check and so as the Data warehouse. Archival and frequent clearing of historical data and log records help optimize space too.

Security Check-Ins and Access Control

The settings and access control should always be up to date.

Conclusion

Choosing the best cloud data warehouse for your business can be overwhelming, as many variables can impact the successful deployment of a system. Despite this, by considering expected use cases and workflows, an enterprise can evaluate the relevant factors and select the warehouse that best fits its needs.

Daton is an automated data pipeline that extracts from multiple sources to data lakes or cloud data warehouses like Snowflake, Google Bigquery, Amazon Redshift where employees can use it for business intelligence and data analytics. The best part is that Daton is easy to set up without the need for any coding experience and it is the cheapest data pipeline available in the market. Sign up for a free trial of Daton today!!